Hi,

I am testing computations on the Google Cloud deployment (server option: 16 CPUs). I would like help interpreting an error that shows up in several of my delayed dask tasks. I want to better understand what dask is doing underneath on Pangeo so that I can troubleshoot this further.

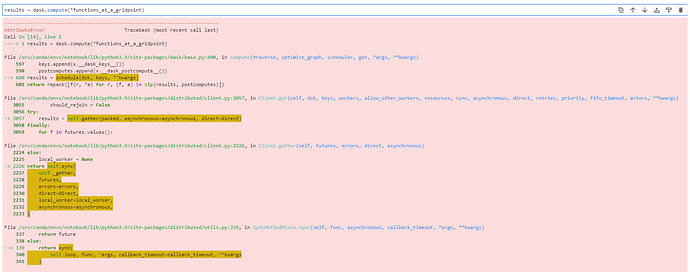

Running a cell with `results = dask.compute(*list_of_delayed_functions)` starts fine, and the dask tasks are fully visible in the dashboard. After a while, however, the computation stops with `AttributeError: 'EntryPoint' object has no attribute '_key'`.
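
For context, the pattern in that cell looks roughly like the following minimal sketch. It creates one delayed call per grid point and evaluates them all with a single `dask.compute`. The body of `optimize` and the grid points are hypothetical stand-ins. The real `optimize(lat1, lon1)` opens a Zarr store and fits basis functions, as the traceback shows.

```python
import dask


# Hypothetical stand-in for optimize(lat1, lon1); the real function opens a
# Zarr store and fits basis functions within a local window.
def optimize(lat1, lon1):
    return lat1 + lon1


# Hypothetical grid points; the real ones are not shown in the post.
gridpoints = [(10.0, 20.0), (10.5, 20.0), (11.0, 20.5)]

# One lazy task per grid point; nothing is computed yet.
functions_at_a_gridpoint = [dask.delayed(optimize)(lat, lon) for lat, lon in gridpoints]

# Evaluate all tasks together. If a dask.distributed Client has been created,
# it becomes the default scheduler, so the tasks run on the cluster and appear
# in the dashboard; otherwise dask uses its local threaded scheduler.
results = dask.compute(*functions_at_a_gridpoint)
print(results)  # (30.0, 30.5, 31.5)
```

`dask.compute` returns a tuple with one result per delayed object, in the same order as the arguments.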

I am not familiar with this error message and would appreciate directions or general advice.

- Rerunning the same cell makes the computation run for a while again, but it stops at a different function. So it is not clear that any one specific function triggers this error.

- I have updated the default `notebook` environment from the terminal with `conda update -n notebook --all`.
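
When reporting an environment problem like this one, listing the versions of the packages involved can help. The following minimal sketch uses the standard library's `importlib.metadata` (Python 3.8+). The package list is an assumption based on the files named in the traceback. `importlib-metadata` is the standalone distribution, which may not be installed; setuptools ships its own vendored copy.

```python
from importlib.metadata import PackageNotFoundError, version

# Packages whose files appear in the traceback (assumed to be the relevant ones).
PACKAGES = ["dask", "distributed", "xarray", "tornado", "setuptools", "importlib-metadata"]


def package_versions(names):
    """Return {name: installed version string, or None if not installed}."""
    found = {}
    for name in names:
        try:
            found[name] = version(name)
        except PackageNotFoundError:
            found[name] = None
    return found


for name, ver in package_versions(PACKAGES).items():
    print(f"{name:20} {ver or 'not installed'}")
```

This reports the environment of the Python process it runs in. In this setup, that is the notebook kernel, not necessarily the dask workers.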

I could not fit the full error message into one screenshot, so I have copied it below as preformatted text.

Best,

Ola

```
---------------------------------------------------------------------------
AttributeError Traceback (most recent call last)
Cell In [14], line 1
----> 1 results = dask.compute(*functions_at_a_gridpoint)

File /srv/conda/envs/notebook/lib/python3.9/site-packages/dask/base.py:600, in compute(traverse, optimize_graph, scheduler, get, *args, **kwargs)
597 keys.append(x.__dask_keys__())
598 postcomputes.append(x.__dask_postcompute__())
--> 600 results = schedule(dsk, keys, **kwargs)
601 return repack([f(r, *a) for r, (f, a) in zip(results, postcomputes)])

File /srv/conda/envs/notebook/lib/python3.9/site-packages/distributed/client.py:3057, in Client.get(self, dsk, keys, workers, allow_other_workers, resources, sync, asynchronous, direct, retries, priority, fifo_timeout, actors, **kwargs)
3055 should_rejoin = False
3056 try:
-> 3057 results = self.gather(packed, asynchronous=asynchronous, direct=direct)
3058 finally:
3059 for f in futures.values():

File /srv/conda/envs/notebook/lib/python3.9/site-packages/distributed/client.py:2226, in Client.gather(self, futures, errors, direct, asynchronous)
2224 else:
2225 local_worker = None
-> 2226 return self.sync(
2227 self._gather,
2228 futures,
2229 errors=errors,
2230 direct=direct,
2231 local_worker=local_worker,
2232 asynchronous=asynchronous,
2233 )

File /srv/conda/envs/notebook/lib/python3.9/site-packages/distributed/utils.py:339, in SyncMethodMixin.sync(self, func, asynchronous, callback_timeout, *args, **kwargs)
337 return future
338 else:
--> 339 return sync(
340 self.loop, func, *args, callback_timeout=callback_timeout, **kwargs
341 )

File /srv/conda/envs/notebook/lib/python3.9/site-packages/distributed/utils.py:406, in sync(loop, func, callback_timeout, *args, **kwargs)
404 if error:
405 typ, exc, tb = error
--> 406 raise exc.with_traceback(tb)
407 else:
408 return result

File /srv/conda/envs/notebook/lib/python3.9/site-packages/distributed/utils.py:379, in sync.<locals>.f()
377 future = asyncio.wait_for(future, callback_timeout)
378 future = asyncio.ensure_future(future)
--> 379 result = yield future
380 except Exception:
381 error = sys.exc_info()

File /srv/conda/envs/notebook/lib/python3.9/site-packages/tornado/gen.py:762, in Runner.run(self)
759 exc_info = None
761 try:
--> 762 value = future.result()
763 except Exception:
764 exc_info = sys.exc_info()

File /srv/conda/envs/notebook/lib/python3.9/site-packages/distributed/client.py:2089, in Client._gather(self, futures, errors, direct, local_worker)
2087 exc = CancelledError(key)
2088 else:
-> 2089 raise exception.with_traceback(traceback)
2090 raise exc
2091 if errors == "skip":

Cell In [9], line 7, in optimize()
1 def optimize(lat1,lon1):
3 """
4 Computes gridded coefficients of fit based on basisfunctions and dynamic height data within a local window
5 """
----> 7 ds = xr.open_zarr(mapper, consolidated=True, chunks=None, decode_times=False)
8 distance = xr.apply_ufunc(great_circle_distance, lat1, lon1, ds.latitude.load(), ds.longitude.load())
9 ds_radius = ds.where(distance < window_size, drop=True)

File /srv/conda/envs/notebook/lib/python3.9/site-packages/xarray/backends/zarr.py:789, in open_zarr()
778 if kwargs:
779 raise TypeError(
780 "open_zarr() got unexpected keyword arguments " + ",".join(kwargs.keys())
781 )
783 backend_kwargs = {
784 "synchronizer": synchronizer,
785 "consolidated": consolidated,
786 "overwrite_encoded_chunks": overwrite_encoded_chunks,
787 "chunk_store": chunk_store,
788 "storage_options": storage_options,
--> 789 "stacklevel": 4,
790 }
792 ds = open_dataset(
793 filename_or_obj=store,
794 group=group,
(...)
805 use_cftime=use_cftime,
806 )
807 return ds

File /srv/conda/envs/notebook/lib/python3.9/site-packages/xarray/backends/api.py:517, in open_dataset()
514 if engine is None:
515 engine = plugins.guess_engine(filename_or_obj)
--> 517 backend = plugins.get_backend(engine)
519 decoders = _resolve_decoders_kwargs(
520 decode_cf,
521 open_backend_dataset_parameters=backend.open_dataset_parameters,
(...)
527 decode_coords=decode_coords,
528 )
530 overwrite_encoded_chunks = kwargs.pop("overwrite_encoded_chunks", None)

File /srv/conda/envs/notebook/lib/python3.9/site-packages/xarray/backends/plugins.py:161, in get_backend()
159 """Select open_dataset method based on current engine."""
160 if isinstance(engine, str):
--> 161 engines = list_engines()
162 if engine not in engines:
163 raise ValueError(
164 f"unrecognized engine {engine} must be one of: {list(engines)}"
165 )

File /srv/conda/envs/notebook/lib/python3.9/site-packages/xarray/backends/plugins.py:106, in list_engines()
104 else:
105 entrypoints = entry_points().get("xarray.backends", ())
--> 106 return build_engines(entrypoints)

File /srv/conda/envs/notebook/lib/python3.9/site-packages/xarray/backends/plugins.py:91, in build_engines()
89 if backend.available:
90 backend_entrypoints[backend_name] = backend
---> 91 entrypoints = remove_duplicates(entrypoints)
92 external_backend_entrypoints = backends_dict_from_pkg(entrypoints)
93 backend_entrypoints.update(external_backend_entrypoints)

File /srv/conda/envs/notebook/lib/python3.9/site-packages/xarray/backends/plugins.py:23, in remove_duplicates()
20 unique_entrypoints = []
21 for name, matches in entrypoints_grouped:
22 # remove equal entrypoints
---> 23 matches = list(set(matches))
24 unique_entrypoints.append(matches[0])
25 matches_len = len(matches)

File /srv/conda/envs/notebook/lib/python3.9/site-packages/setuptools/_vendor/importlib_metadata/__init__.py:239, in __eq__()
238 def __eq__(self, other):
--> 239 return self._key() == other._key()

AttributeError: 'EntryPoint' object has no attribute '_key'
```
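
The last two frames show xarray's `remove_duplicates` running `list(set(matches))`. During that call, `set()` invokes an `EntryPoint.__eq__` from setuptools' vendored `importlib_metadata`, which returns `self._key() == other._key()`. That comparison only works if both objects have a `_key` method. The minimal sketch below uses two hypothetical classes, not the real `importlib_metadata` ones. It shows how `set()` calls `__eq__` when two objects hash equally, and how that call fails if the other object has no `_key`. Whether two different kinds of entry point objects are actually being mixed in this environment is an assumption; the traceback alone does not prove it.

```python
from collections import namedtuple


class KeyedEntryPoint:
    """Hypothetical entry point whose equality is defined via a private _key()."""

    def __init__(self, name, value, group):
        self.name = name
        self.value = value
        self.group = group

    def _key(self):
        return (self.name, self.value, self.group)

    def __hash__(self):
        return hash(self._key())

    def __eq__(self, other):
        # No type check: this raises AttributeError if `other` has no _key().
        return self._key() == other._key()


# Hypothetical tuple-based entry point with the same fields but no _key() method.
# Its hash is the plain tuple hash, so it equals hash(KeyedEntryPoint._key()).
TupleEntryPoint = namedtuple("TupleEntryPoint", ["name", "value", "group"])


def dedupe(entries):
    """Mimics the `list(set(matches))` step in the traceback."""
    return list(set(entries))


# Hypothetical entry point values, for illustration only.
a = KeyedEntryPoint("demo", "demo_pkg:Backend", "xarray.backends")
a_copy = KeyedEntryPoint("demo", "demo_pkg:Backend", "xarray.backends")
b = TupleEntryPoint("demo", "demo_pkg:Backend", "xarray.backends")

# Same class on both sides: __eq__ works and the duplicate is dropped.
same_kind = dedupe([a, a_copy])
print(len(same_kind))  # 1

# Mixed classes with equal hashes: set() calls __eq__, which raises.
message = None
try:
    dedupe([a, b])
except AttributeError as err:
    message = str(err)
print(message)
```

A set compares two objects with `__eq__` only when their hashes match. So an error like this appears only when equal-looking entries of incompatible types meet in the same `set()` call.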