Dear Pangeo community,

As you may know, Sentinel-2 rasters are distributed as JPEG2000.

If you try to crop an S2 raster remotely, you’ll find that many requests are issued, so the format is quickly classified as “not cloud native”, in contrast to COG, Zarr, etc.

This might be why the format was not even mentioned in the recent discussion about raster file formats.

A weak definition of “cloud optimized” for imagery rasters could be:

- individual tile access, to allow partial reads,

- easy localization of any tile, to issue a single range request to retrieve it.

Point 1 is easy to verify: gdalinfo reports Block=NxM for each band of a tiled raster (e.g. Block=1024x1024 for S2 rasters).
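With a tiled layout, a crop only needs the tiles it overlaps. Below is a minimal sketch of that bookkeeping, assuming the tile grid is anchored at pixel (0, 0) and tiles are numbered left to right, then top to bottom, as in JPEG2000. `tiles_for_window` is a hypothetical helper, not a GDAL function.

```python
from typing import List


def tiles_for_window(x0: int, y0: int, width: int, height: int,
                     image_width: int, image_height: int,
                     block_w: int = 1024, block_h: int = 1024) -> List[int]:
    """Return raster-order indices of the tiles overlapping a pixel window.

    Hypothetical helper. Assumes the tile grid is anchored at pixel (0, 0)
    and that tiles are numbered left to right, then top to bottom.
    """
    if width <= 0 or height <= 0:
        return []
    tiles_x = -(-image_width // block_w)  # ceiling division: tiles per row
    # Last pixel covered by the window, clipped to the image bounds.
    x1 = min(x0 + width, image_width) - 1
    y1 = min(y0 + height, image_height) - 1
    cols = range(x0 // block_w, x1 // block_w + 1)
    rows = range(y0 // block_h, y1 // block_h + 1)
    return [row * tiles_x + col for row in rows for col in cols]


# A 100x100 crop straddling the first vertical tile boundary of a
# 10980x10980 band (a 10 m Sentinel-2 band) touches 2 of its 121 tiles.
print(tiles_for_window(1000, 0, 100, 100, 10980, 10980))  # [0, 1]
```

Tiling alone is not enough, though: the reader still has to know where each of those tiles starts in the file.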

Point 2 is trickier and requires more knowledge of the data format.

TIFF uses the TileOffsets and TileByteCounts tags, and Zarr encodes the chunk id in each chunk’s filename. For JPEG2000, the optional TLM (tile-part lengths) marker segment in the main header serves this purpose.
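To make this concrete, here is a minimal sketch that reads the TLM marker segments (marker 0xFF55) of a raw JPEG2000 codestream and turns them into byte ranges. Assumptions: for a .jp2 file, the input is the payload of the `jp2c` box; TLM entries follow codestream order; and multiple TLM segments, if any, are stored in Ztlm order. `tlm_tile_part_ranges` is a hypothetical name, not an OpenJPEG or GDAL API.

```python
import struct
from typing import List, Tuple

SOC, SOT, TLM = 0xFF4F, 0xFF90, 0xFF55


def tlm_tile_part_ranges(codestream: bytes) -> List[Tuple[int, int]]:
    """Return (offset, length) of every tile-part listed in the TLM segments.

    Offsets are relative to the start of `codestream`. Hypothetical helper;
    assumes the TLM entries follow codestream order.
    """
    if struct.unpack_from(">H", codestream, 0)[0] != SOC:
        raise ValueError("missing SOC marker: not a raw JPEG2000 codestream")
    pos, lengths = 2, []
    # Walk the main header marker segments until the first SOT marker.
    while True:
        marker, seg_len = struct.unpack_from(">HH", codestream, pos)
        if marker == SOT:
            break
        if marker == TLM:
            stlm = codestream[pos + 5]         # Stlm follows Ltlm (2 bytes) and Ztlm (1 byte)
            t_size = (stlm >> 4) & 0x3         # Ttlm size in bytes: 0, 1 or 2
            p_size = 4 if (stlm >> 6) & 0x1 else 2  # Ptlm size: 16 or 32 bits
            entries = codestream[pos + 6:pos + 2 + seg_len]
            step = t_size + p_size
            for i in range(0, len(entries), step):
                lengths.append(int.from_bytes(entries[i + t_size:i + step], "big"))
        pos += 2 + seg_len  # seg_len counts itself but not the 2-byte marker
    # Each Ptlm covers a whole tile-part (from its SOT to the end of its data),
    # so the tile-parts are contiguous, starting at the first SOT.
    ranges, offset = [], pos
    for length in lengths:
        ranges.append((offset, length))
        offset += length
    return ranges
```

Once this table is known, fetching any tile is a single range request, which is exactly what TileOffsets/TileByteCounts give a COG.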

Until recently, OpenJPEG did not use TLM markers to optimize decoding, but this was fixed in the 2.5.3 release.

So why is remote partial reading of Sentinel-2 rasters still so slow? It’s because the TLM option was never enabled!
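To see why the missing TLM hurts, consider how a reader can find tile k without it. Each SOT marker segment only gives the length (Psot) of its own tile-part, so the reader has to hop from one SOT header to the next. Below is a minimal sketch, assuming one tile-part per tile and a hypothetical `read(offset, size)` callback standing in for one HTTP range request. It illustrates the access pattern and does not describe any particular library’s implementation.

```python
import struct
from typing import Callable, Tuple

SOT = 0xFF90


def locate_tile_without_tlm(read: Callable[[int, int], bytes],
                            first_sot: int, tile_index: int) -> Tuple[int, int, int]:
    """Find a tile-part by walking SOT headers; return (offset, length, reads).

    `read` is a hypothetical callback; each call models one range request.
    """
    offset, reads = first_sot, 0
    while True:
        # SOT segment: marker, Lsot, Isot (tile index), Psot (tile-part length), TPsot, TNsot
        header = read(offset, 12)
        reads += 1
        if len(header) < 10:
            raise LookupError(f"tile {tile_index} not found")
        marker, _lsot, isot, psot = struct.unpack(">HHHI", header[:10])
        if marker != SOT:
            raise ValueError(f"expected SOT at offset {offset}")
        if isot == tile_index:
            return offset, psot, reads
        if psot == 0:  # Psot = 0: last tile-part, runs to the end of the codestream
            raise LookupError(f"tile {tile_index} not found")
        offset += psot
```

Under this model, reaching the last tile of an 11x11 grid costs 121 sequential requests, each depending on the previous one, whereas a TLM table gives the offset directly.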

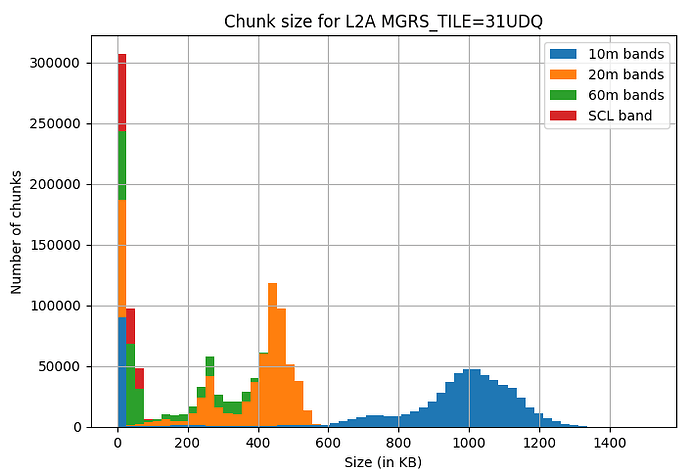

We have run simple benchmarks (see Kayrros/sentinel-2-jp2-tlm on GitHub), and they show that enabling the option for future products would make Sentinel-2 imagery “cloud native”, with performance similar to COG or Zarr, while requiring only a minor change to the format.

What about the archive data, which is all online on CDSE S3 but inefficient to access because of the lack of TLMs?

We developed a “TLM indexer” to precompute the TLM tables for all historical data (partially public in the GitHub repository above). Following a suggestion by Even Rouault to use GDAL’s /vsisparse/, we can then inject them on the fly during a partial read (Python package “jp2io”, in development in the same repository).
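For readers curious about the /vsisparse/ trick, here is a minimal sketch of how such a splice could be described, using the XML layout documented for GDAL’s /vsisparse/ (VSISparseFile, SubfileRegion, DestinationOffset, SourceOffset, RegionLength). It is not the jp2io implementation. Assumptions: the precomputed TLM segment sits in a small separate file; `insert_at` points inside the main header after the SIZ segment; the source can be any path GDAL opens (e.g. a /vsis3/ path); and any enclosing box length, such as a nonzero `jp2c` LBox, is patched separately, which this sketch does not do.

```python
import xml.etree.ElementTree as ET


def sparse_insert_xml(source: str, source_size: int, patch: str,
                      patch_size: int, insert_at: int) -> str:
    """Describe `source` with the bytes of `patch` inserted at `insert_at`.

    Hypothetical helper producing a /vsisparse/ XML document; it does not
    update box or segment length fields inside the file.
    """
    root = ET.Element("VSISparseFile")
    ET.SubElement(root, "Length").text = str(source_size + patch_size)

    def add_region(filename: str, dest: int, src: int, length: int) -> None:
        region = ET.SubElement(root, "SubfileRegion")
        ET.SubElement(region, "Filename", relative="0").text = filename
        ET.SubElement(region, "DestinationOffset").text = str(dest)
        ET.SubElement(region, "SourceOffset").text = str(src)
        ET.SubElement(region, "RegionLength").text = str(length)

    add_region(source, 0, 0, insert_at)                  # bytes before the insertion point
    add_region(patch, insert_at, 0, patch_size)          # injected TLM marker segment
    add_region(source, insert_at + patch_size, insert_at,
               source_size - insert_at)                  # remainder, shifted by patch_size
    return ET.tostring(root, encoding="unicode")
```

The XML would then be written to a file and opened as `/vsisparse/<path to the XML>`, e.g. with `gdal.Open`. GDAL only sees a codestream whose main header contains a TLM, while the tile data is still read from the original object.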

In short: highly efficient partial access to Sentinel-2 rasters is possible without converting the collections to COG or Zarr!

We are currently working on indexing the full archive and plan to make the indexes publicly available in some form. We are also trying to get ESA to enable the TLM option for future products.

What do you think of this approach?

Also, I’m not very familiar with Kerchunk/VirtualiZarr, but I believe it should be possible to build a virtual datacube of Sentinel-2 from the unmodified JPEG2000 data on CDSE plus the TLM indexes. I’d love to get some feedback on this.
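To make the idea concrete for Kerchunk/VirtualiZarr folks, here is a rough sketch of a Kerchunk (version 1) reference set built from one band’s TLM ranges, mapping each JPEG2000 tile to a Zarr v2 chunk. It rests on strong assumptions: one tile-part per tile in raster order, a uint16 band, and a hypothetical codec (id `jp2_tile` here) that would prepend the main header to each tile-part before decoding and pad the smaller edge tiles to the full chunk shape. No such codec is implied to exist, and the URL and variable name are placeholders.

```python
import json
from typing import Dict, List, Tuple


def kerchunk_refs(url: str, var: str, shape: Tuple[int, int],
                  block: Tuple[int, int],
                  tile_ranges: List[Tuple[int, int]]) -> Dict:
    """Build Kerchunk v1 references mapping JPEG2000 tiles to Zarr v2 chunks.

    Hypothetical sketch: assumes one tile-part per tile, in raster order, and
    a codec with the made-up id "jp2_tile" able to decode a bare tile-part.
    """
    height, width = shape
    block_h, block_w = block
    tiles_x = -(-width // block_w)   # ceiling division
    tiles_y = -(-height // block_h)
    if len(tile_ranges) != tiles_x * tiles_y:
        raise ValueError("expected exactly one byte range per tile")
    zarray = {
        "zarr_format": 2,
        "shape": [height, width],
        "chunks": [block_h, block_w],
        "dtype": "<u2",                      # assumed uint16 band
        "compressor": {"id": "jp2_tile"},    # hypothetical codec id
        "fill_value": 0,
        "filters": None,
        "order": "C",
    }
    refs = {
        ".zgroup": json.dumps({"zarr_format": 2}),
        f"{var}/.zarray": json.dumps(zarray),
        f"{var}/.zattrs": json.dumps({"_ARRAY_DIMENSIONS": ["y", "x"]}),
    }
    for index, (offset, length) in enumerate(tile_ranges):
        row, col = divmod(index, tiles_x)
        refs[f"{var}/{row}.{col}"] = [url, offset, length]  # chunk key "row.col"
    return {"version": 1, "refs": refs}
```

Serialized to JSON, such a reference set could in principle be opened through fsspec’s `reference://` filesystem once a matching codec is registered. The missing piece is the codec, not the indexing.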